Smarter RAG Systems with Graphs

Introduction: So You Want Your LLM to Stop Guessing

Everyone’s buzzing about Retrieval-Augmented Generation (RAG) like it’s the second coming of AI engineering. And honestly? It kinda is. Your large language model is excellent at confidently making things up—because it doesn’t actually know anything beyond whatever data it was last trained on (hello, 2023).

That’s where RAG comes in: bolting on real, external knowledge so your LLM hallucinates less and starts answering from real sources.

But here’s the thing: knowledge isn’t just about facts. It’s about how things connect. And when relationships matter, you don’t want a flat file or a relational torture device. You want a graph.

Enter the graph database. Specifically: Memgraph—a real-time, in-memory graph database that feels like it was built by actual humans for other actual humans, not for a distributed cluster of suffering.

And now, before someone @ me:

I am not a Memgraph employee. I’m not paid by Memgraph. I still stick Post-its on my fridge and call it MemFridge. I’m not being forced by Memgraph to write this article after I pushed the Epstein files into their trial database. I’m not locked in a basement in Zagreb, Croatia—though if you squint, Maghreb kind of sounds like it.

I’m actually writing this while nursing a sore throat and reflecting on whether the AI apocalypse will arrive before I find decent cough syrup.

I’m just a person who builds with RAG systems, has trust issues with stale data, and wants tools that don’t make me cry.

So let’s get into why Memgraph is great for building fast, intelligent, less-fake AI systems—and how it fits into a modern RAG stack without making you sell your soul to Kubernetes.

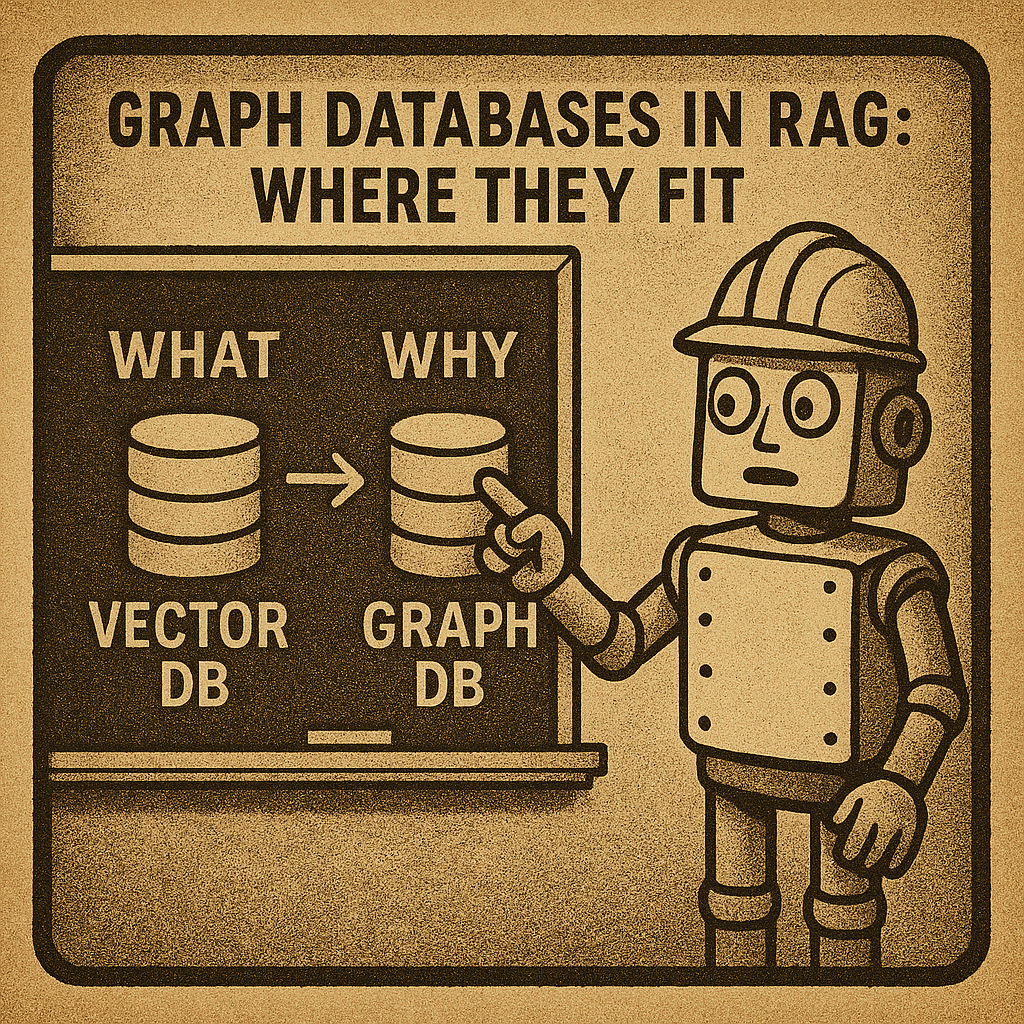

Graph Databases in RAG: Where They Fit

Let’s sketch out a typical RAG architecture:

Your vector DB fetches semantically relevant chunks. Your graph DB (like Memgraph) injects contextual relationships, hierarchy, metadata, and freshness. Combine both, and your LLM isn’t just guessing; it’s answering from relevant chunks plus the relationships that connect them.
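Here’s a minimal sketch of that combo in plain Ruby, assuming nothing about any real client library: a hash of made-up embeddings stands in for the vector DB, a hash of made-up edges stands in for the graph, and `hybrid_context` is a hypothetical helper. Vector similarity picks the top-k chunks, then one graph hop pulls in what they’re connected to.

```ruby
# Hybrid retrieval sketch: vector top-k, then graph expansion.
# Everything here is hypothetical and in-memory: EMBEDDINGS stands in for a
# vector DB and GRAPH for a graph DB; real embeddings come from a model.

# Hypothetical chunk embeddings (tiny 3-d vectors for illustration).
EMBEDDINGS = {
  "graph_dbs"   => [0.9, 0.1, 0.0],
  "vector_dbs"  => [0.8, 0.3, 0.1],
  "cough_syrup" => [0.0, 0.1, 0.9]
}.freeze

# Hypothetical directed relationships between chunks/concepts.
GRAPH = {
  "graph_dbs"  => ["cypher", "memgraph"],
  "vector_dbs" => ["embeddings"],
  "cypher"     => ["opencypher"]
}.freeze

# Cosine similarity between two equal-length, non-zero vectors.
def cosine(a, b)
  dot = a.zip(b).sum { |x, y| x * y }
  norm = ->(v) { Math.sqrt(v.sum { |x| x * x }) }
  dot / (norm.(a) * norm.(b))
end

# Step 1, the "what": top-k chunk ids by similarity to the query vector.
def vector_top_k(query_vec, k)
  EMBEDDINGS.max_by(k) { |_id, vec| cosine(query_vec, vec) }.map(&:first)
end

# Step 2, the "why": direct (1-hop) neighbors of each hit, minus the hits themselves.
def expand_with_graph(ids)
  ids.flat_map { |id| GRAPH.fetch(id, []) }.uniq - ids
end

# Combine both into the context you would hand to the LLM.
def hybrid_context(query_vec, k: 2)
  hits = vector_top_k(query_vec, k)
  { hits: hits, related: expand_with_graph(hits) }
end

p hybrid_context([1.0, 0.2, 0.0])
```

In a real stack, step 1 would be a vector-index query and step 2 a Cypher traversal; the shape of the pipeline stays the same.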

Use cases:

- Link authors, topics, and papers in a knowledge graph to cut down on hallucinations.

- Show product recommendations in context (“people who viewed this also viewed…”).

- Trace real-time fraud patterns across entities.
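The recommendation case is a two-hop walk: from a product to the users who viewed it, then on to whatever else those users viewed. A toy Ruby version, with hypothetical sample data standing in for `(:User)-[:VIEWED]->(:Product)` edges (the labels and the `also_viewed` helper are made up for illustration):

```ruby
# "People who viewed this also viewed..." as a two-hop walk:
# product -> users who viewed it -> other products those users viewed.
# VIEWS is hypothetical sample data: user => products they viewed.
VIEWS = {
  "ana"   => ["kettle", "teapot", "mugs"],
  "ben"   => ["kettle", "mugs"],
  "chloe" => ["kettle", "toaster"],
  "dev"   => ["toaster"]
}.freeze

# Rank products co-viewed with `product`, most shared viewers first.
def also_viewed(product, limit: 3)
  counts = Hash.new(0)
  VIEWS.each_value do |viewed|
    next unless viewed.include?(product)                     # hop 1: users who viewed it
    (viewed - [product]).each { |other| counts[other] += 1 }  # hop 2: what else they viewed
  end
  # Highest count first; ties broken alphabetically for stable output.
  counts.sort_by { |name, n| [-n, name] }.first(limit).map(&:first)
end

p also_viewed("kettle") # prints ["mugs", "teapot", "toaster"]
```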

Vector search gives you “what.” Graph search gives you “why.” And Memgraph can serve it in milliseconds, well before your user’s attention span runs out.

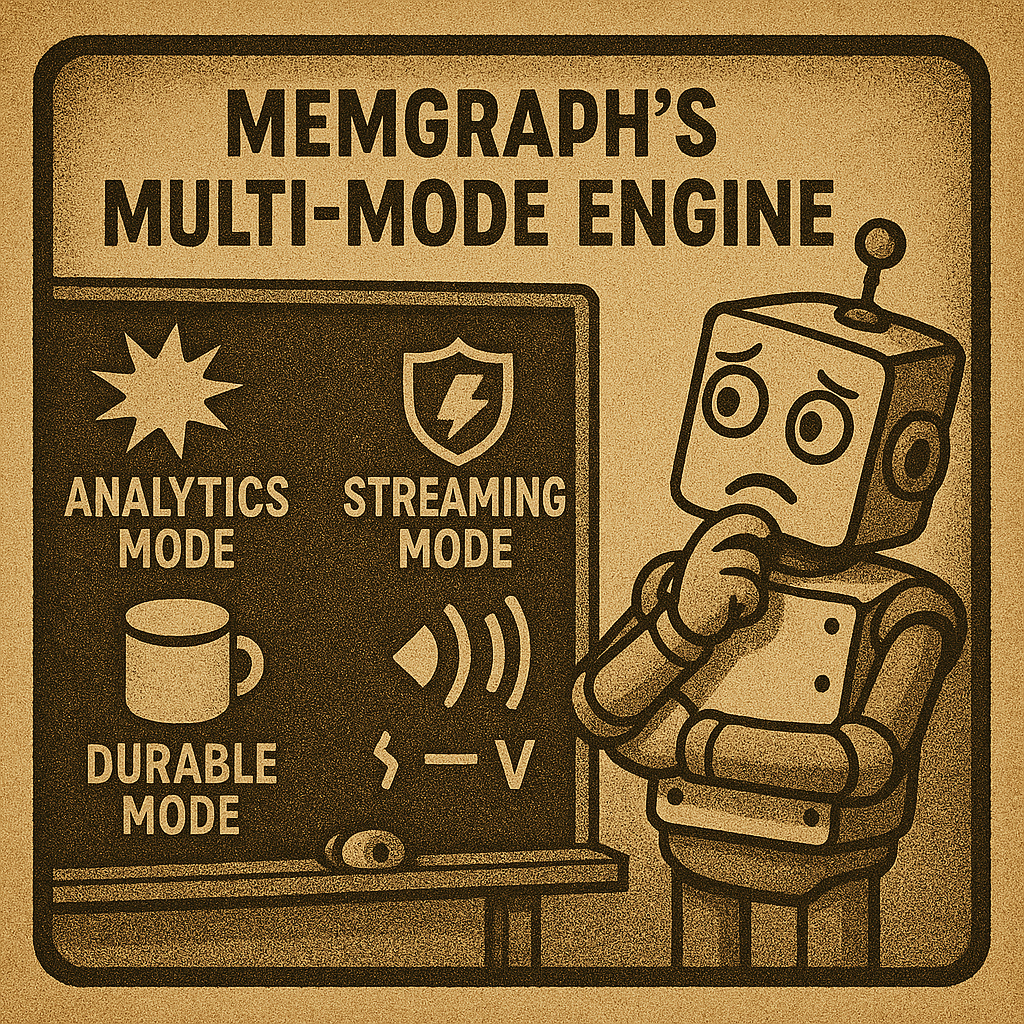

Memgraph’s Engine: Performance Meets Simplicity

From a user’s perspective, Memgraph offers three storage modes for different scenarios:

1. In-Memory Transactional Mode (Default)

Need speed? This is the Sonic Boom mode.

- Stores all data in RAM.

- Blazing-fast traversals for multi-hop queries.

- Perfect for low-latency, high-throughput RAG pipelines.

Think real-time recommendations, fraud detection, or live knowledge graphs.
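To make “multi-hop” concrete: conceptually, a traversal like the `[:RELATED_TO*1..2]` pattern in the query examples collects everything reachable in one or two hops. Here’s a hypothetical breadth-first sketch in plain Ruby over a made-up adjacency list. Memgraph does this natively against its in-memory graph, so treat this as an illustration of the result (distinct nodes, start excluded), not of how Memgraph implements it.

```ruby
require "set"

# Hypothetical adjacency list standing in for RELATED_TO edges.
RELATED_TO = {
  "Graph Databases"  => ["Cypher", "Knowledge Graphs"],
  "Cypher"           => ["openCypher"],
  "Knowledge Graphs" => ["RAG", "Graph Databases"],
  "RAG"              => ["Vector Embeddings"]
}.freeze

# Breadth-first walk returning every distinct node reachable in 1..max_hops hops.
# The visited set means each node is reported once and cycles can't loop forever.
def within_hops(start, max_hops)
  visited = Set[start]
  frontier = [start]
  found = []
  max_hops.times do
    frontier = frontier.flat_map { |node| RELATED_TO.fetch(node, []) }
                       .reject { |n| visited.include?(n) }
                       .uniq
    frontier.each { |n| visited << n }
    found.concat(frontier)
  end
  found
end

p within_hops("Graph Databases", 2) # prints ["Cypher", "Knowledge Graphs", "openCypher", "RAG"]
```

Note that the cycle back to “Graph Databases” is ignored and “Vector Embeddings” only shows up at three hops.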

2. In-Memory Analytical Mode

- Optimized for fast bulk imports and complex analytical queries and algorithms. The trade-off: no ACID guarantees beyond snapshots you create manually.

- Great for heavy-duty graph computations, batch processing, and analytics.

3. On-Disk Transactional Mode (Experimental)

- Data stored primarily on disk to handle larger graphs.

- Currently experimental, with lower query performance.

Use this mode cautiously—ideal if your graph outgrows RAM, but be aware of the trade-offs.

Streaming (Always Fresh Data)

Streaming isn’t technically a storage mode—it’s your data ingestion method, compatible with any of the above modes.

- Direct Kafka and Pulsar integrations.

- Ingest new data as it arrives.

- Keeps your knowledge graph updated without painful rebuilds.

Imagine your knowledge graph evolving as users interact. Your RAG system stays fresh. Just stream, merge, respond.
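Merging is what keeps “stream, merge, respond” safe: if a message gets delivered twice, an upsert (Cypher’s `MERGE`) matches the existing data instead of duplicating it. A minimal in-memory Ruby sketch of that idempotency; `TinyGraph` and the event shape are hypothetical and are not Memgraph’s stream transformation API.

```ruby
require "set"

# Toy graph with MERGE-style (idempotent) writes.
class TinyGraph
  attr_reader :nodes, :edges

  def initialize
    @nodes = Set.new # node names
    @edges = Set.new # [from, rel, to] triples
  end

  # Like Cypher MERGE: an existing node/edge is matched, a missing one is created.
  def merge_edge(from, rel, to)
    @nodes << from << to
    @edges << [from, rel, to]
    self
  end

  # Apply a batch of events as they arrive from the stream.
  def ingest(events)
    events.each { |e| merge_edge(e[:from], e[:rel], e[:to]) }
    self
  end
end

# Hypothetical event shape: { from:, rel:, to: }.
stream = [
  { from: "doc-42", rel: "MENTIONS", to: "Memgraph" },
  { from: "doc-42", rel: "MENTIONS", to: "Memgraph" }, # duplicate delivery
  { from: "doc-43", rel: "MENTIONS", to: "Memgraph" }
]

g = TinyGraph.new.ingest(stream)
puts "#{g.nodes.size} nodes, #{g.edges.size} edges" # prints "3 nodes, 2 edges"
```

Replaying the whole stream leaves the counts unchanged, which is exactly why you don’t need a painful rebuild after a hiccup.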

Developer Experience: The Stuff That Actually Matters

Memgraph is built like someone actually thought about the person using it. You get:

- Cypher support: Memgraph speaks openCypher, the same query language family as Neo4j’s Cypher (with a few dialect differences). Little learning curve if you’ve written Cypher before.

- Prototype-level support for ActiveCypher: A lightweight ORM inspired by ActiveRecord for Rubyists who like danger.

- Memgraph Lab: A GUI that doesn’t make your eyes bleed. Visualize, explore, debug.

- Docs that don’t insult you: Actually helpful. Actually updated.

- Browser-based Playground: No install. Just run and play.

You know how most databases feel like they were built by a committee in 1997 in LaTeX? Memgraph feels like it was built by someone who’s built an actual app before.

Query Examples: Because Words Are Cheap

```cypher
// Get distinct related topics within 1 to 2 hops
MATCH (topic:Concept {name: 'Graph Databases'})-[:RELATED_TO*1..2]->(other)
WHERE other <> topic
RETURN DISTINCT other.name
LIMIT 10;
```

And the ActiveCypher flavor, for the Rubyists:

```ruby
# Define a Concept node model
class Concept < ApplicationGraphNode
  property :name, type: :string
end

# Inserting a concept
Concept.create(name: "Vector Embeddings")
```

Integration Into a RAG Stack

You can slot Memgraph into your AI stack easily:

- Combine with a vector store such as pgvector, or use Memgraph’s built-in vector search.

- Serve via REST, WebSockets, or Bolt.

- Extend with custom graph procedures (query modules in Python, C/C++, or Rust, because sanity matters).

Want to update your graph as new documents are embedded? Use streaming integrations. Want to prioritize certain paths based on weights? Use Cypher and built-in graph algorithms. Want to sleep? Use snapshots and write-ahead logs (WAL) in in-memory transactional mode (analytical mode skips the WAL), but remember: data still lives in RAM.
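For the “prioritize paths by weights” part, here’s what a weighted search computes, sketched as plain Dijkstra in Ruby. The graph, its weights (lower = stronger link), and `cheapest_path` are all hypothetical; in Memgraph you’d run the weighted traversal inside the database instead.

```ruby
# Hypothetical weighted edges: node => { neighbor => cost }.
EDGES = {
  "question" => { "doc_a" => 1.0, "doc_b" => 4.0 },
  "doc_a"    => { "doc_b" => 1.0, "answer" => 5.0 },
  "doc_b"    => { "answer" => 1.0 }
}.freeze

# Dijkstra's algorithm (non-negative weights).
# Returns [total_cost, path] for the cheapest path, or nil if unreachable.
def cheapest_path(source, target)
  dist = Hash.new(Float::INFINITY)
  prev = {}
  dist[source] = 0.0
  queue = [source]
  until queue.empty?
    # O(V^2) selection keeps the sketch short; use a priority queue for real graphs.
    node = queue.min_by { |n| dist[n] }
    queue.delete(node)
    break if node == target # target's distance is final once it is popped
    EDGES.fetch(node, {}).each do |nbr, w|
      alt = dist[node] + w
      next unless alt < dist[nbr]
      dist[nbr] = alt
      prev[nbr] = node
      queue << nbr unless queue.include?(nbr)
    end
  end
  return nil if dist[target] == Float::INFINITY
  # Walk predecessors back from the target to rebuild the path.
  path = [target]
  path.unshift(prev[path.first]) while prev.key?(path.first)
  [dist[target], path]
end

p cheapest_path("question", "answer") # prints [3.0, ["question", "doc_a", "doc_b", "answer"]]
```

The direct-looking route through `doc_b` (cost 5) loses to the detour via `doc_a` (cost 3), which is the whole point of weighting paths instead of just counting hops.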

What Could Go Wrong? (Spoiler: Not Much)

- Graph too big for RAM? You could try the experimental on-disk mode, but expect lower performance.

- Need high availability? Memgraph supports replication, and full High Availability with automatic failover is available in the Enterprise Edition.

- Want vector-native features? Memgraph’s built-in vector search works well for smaller embedding workloads. For very large ones, pair it with a dedicated vector DB (preferably one that doesn’t have a 9-figure marketing budget).

It’s not magic. It’s just designed like it’s 2025 and not 1995.

Final Thought: Just Try It

If you’re building RAG systems or doing anything graph-related, and you want your queries fast, your setup sane, and your dev experience not soul-crushing—give Memgraph a spin.

Try it for free. It runs in Docker. It has a Playground. It might even make you like databases again. They have a cloud solution that doesn’t force you to hire that guy who knows “Kubernetes” after watching a Fireship video.
